In July 2020, a Canadian man was charged with dangerous driving and speeding in Alberta for allegedly sleeping in a self-piloted Tesla between Edmonton and Calgary. In court on December 11, witnesses claimed that the driver, Leran Cai, and his passenger were asleep with their seats fully reclined while the car traveled at 150 km/h (93 mph). His next court appearance was scheduled for January 29, 2021, but no information has yet been released on the outcome.

Tesla has a feature called Autopilot, which enables automated driving on the highway, but drivers are required to keep their hands on the steering wheel at all times so they can take back control as needed. Drivers must apply a slight amount of torque to the wheel every so often to signal to the system that they are still engaged. Apparently, that safety feature can be tricked with weights so the driver can take a nap. It is unclear whether that is what Leran Cai did in this case.

Falling asleep in a Tesla has made many headlines in the past several years. Here are just a few of the incidents:

- Driver Falls Asleep, Wrecks Tesla In South Brunswick, NJ (Patch)

- Video appears to show Tesla driver ‘literally asleep at the wheel’ (USA Today)

- Another Tesla Driver apparently Fell Asleep—here’s what Tesla could do (ArsTechnica)

- Tesla is sued by the family of a Japanese man who was run over and killed by a car on Autopilot after the driver fell asleep behind the wheel (Daily Mail UK)

Tesla announced in October 2020 that all future Tesla vehicles would have self-driving capability. Many articles were written about this announcement, but this headline from The Verge caught our eye: “Tesla’s ‘Full Self-Driving’ Beta is here, and it looks scary as Hell.” The post also carries the subtitle “Using untrained consumers to validate beta-level software on public roads is dangerous.”

At the end of The Verge post, Ed Niedermeyer, communications director for Partners for Automated Vehicle Education (a group that brings together nonprofits and AV developers such as Waymo, Argo, Cruise and Zoox), said:

“Public road testing is a serious responsibility, and using untrained consumers to validate beta-level software on public roads is dangerous and inconsistent with existing guidance and industry norms. Moreover, it is extremely important to clarify the line between driver assistance and autonomy. Systems requiring driver oversight are not self-driving and should not be called self-driving.”

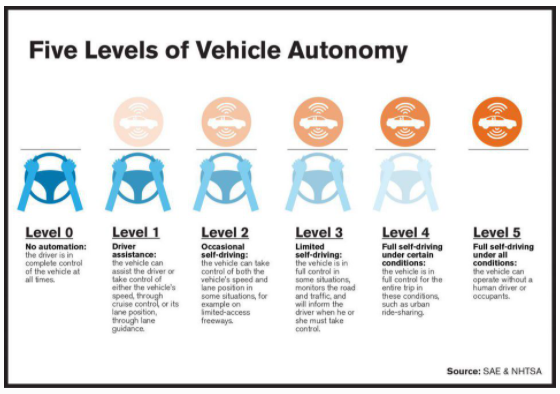

In the United States, motorists and automakers still have no clear-cut autonomous driving guidelines. The National Highway Traffic Safety Administration (NHTSA) has recently been criticized by the National Transportation Safety Board (NTSB), which investigates these driverless car incidents, for not better regulating the levels of driving automation, which range from full driver control (Level 0) to complete system control (Level 5).

Also, if you build the technology, does that mean consumers will buy it? Does self-driving technology really make everyone safer on the road? Many questions remain. It doesn’t seem that Tesla, or anyone else, has yet figured out how to make autonomous driving systems foolproof.

Let’s face it: automakers aren’t good at regulating themselves, and bureaucratic government agencies like the NHTSA often try to accommodate everyone and end up protecting no one, least of all, in this case, drivers and passengers.

In the meantime, do you want to share a highway with a sleeping driver on autopilot?